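@ling775000 I think what they mean is collecting statistics on the data volume and field details. That does need to be computed, and then compared year-over-year and period-over-period.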
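Here is a minimal Python sketch of that kind of statistics. It assumes the cleaned data is available as a list of dicts. The function names `profile` and `pct_change` and the sample counts are hypothetical and only for illustration. Year-over-year compares a period with the same period one year earlier. Period-over-period compares it with the immediately preceding period. Both use (current - previous) / previous.

```python
from collections import defaultdict
from typing import Any, Dict, List, Optional


def profile(rows: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Hypothetical helper: row count plus, per field, the null and distinct-value counts."""
    nulls: Dict[str, int] = defaultdict(int)
    distinct: Dict[str, set] = defaultdict(set)
    for row in rows:
        for field, value in row.items():
            if value is None:
                nulls[field] += 1
            else:
                distinct[field].add(value)
    fields = sorted(set(nulls) | set(distinct))
    return {
        "row_count": len(rows),
        "fields": {f: {"nulls": nulls[f], "distinct": len(distinct[f])} for f in fields},
    }


def pct_change(current: float, previous: float) -> Optional[float]:
    """Relative change (current - previous) / previous; None when there is no baseline."""
    if previous == 0:
        return None
    return (current - previous) / previous


if __name__ == "__main__":
    # Hypothetical monthly row counts taken from profile()["row_count"] for each month.
    counts = {"2023-05": 800, "2024-04": 950, "2024-05": 1000}
    yoy = pct_change(counts["2024-05"], counts["2023-05"])  # same month last year
    mom = pct_change(counts["2024-05"], counts["2024-04"])  # previous month
    print(f"YoY: {yoy:.1%}, MoM: {mom:.1%}")  # YoY: 25.0%, MoM: 5.3%
```

The same `pct_change` can be applied to any per-field metric from `profile`, such as null counts, to spot data-quality drift between periods.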

- Is there anything that still needs to be computed after data cleaning?

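- After an unexpected CDH outage, querying Hive tables through Hue fails with a "table does not exist" error. How can this be fixed? Any help would be appreciated.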

- What are the ways to supply the database password when connecting with Sqoop?

- After adding new nodes to HBase, what happens to the pre-split regions created earlier?

- How can I check whether an HBase table has been pre-split?

- Hadoop: when running wordcount, the job hangs and does not proceed. Why?

- wordcount will not run, and the log says maximum-am-resource-percent is insufficient. How should this be set?

- webservice: problem retrieving values

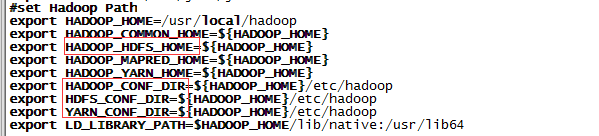

- Nodes fail to start when deploying a Hadoop cluster. Why?

- Solving a Java problem

- Spark: an issue with splits when reading data?